Monitoring

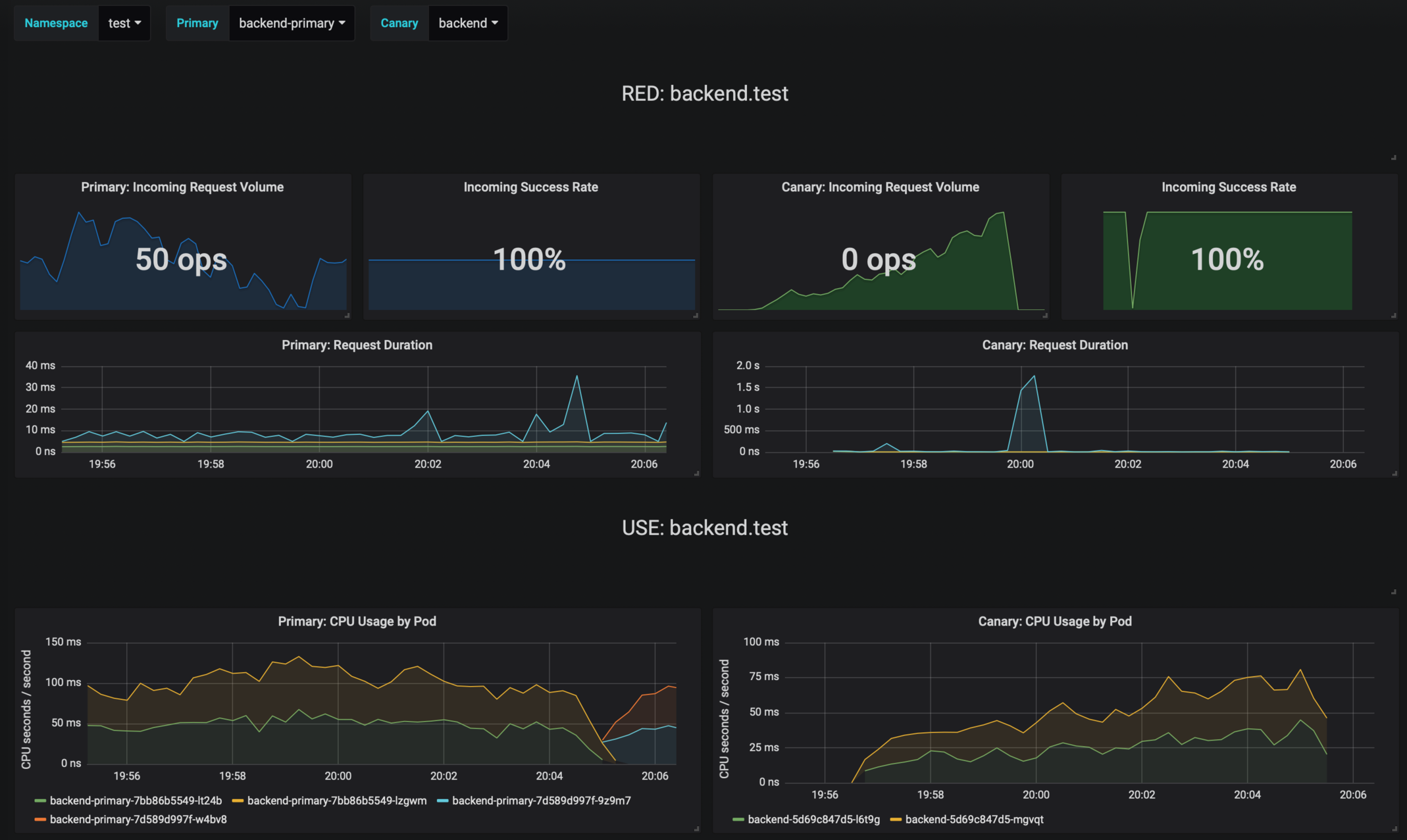

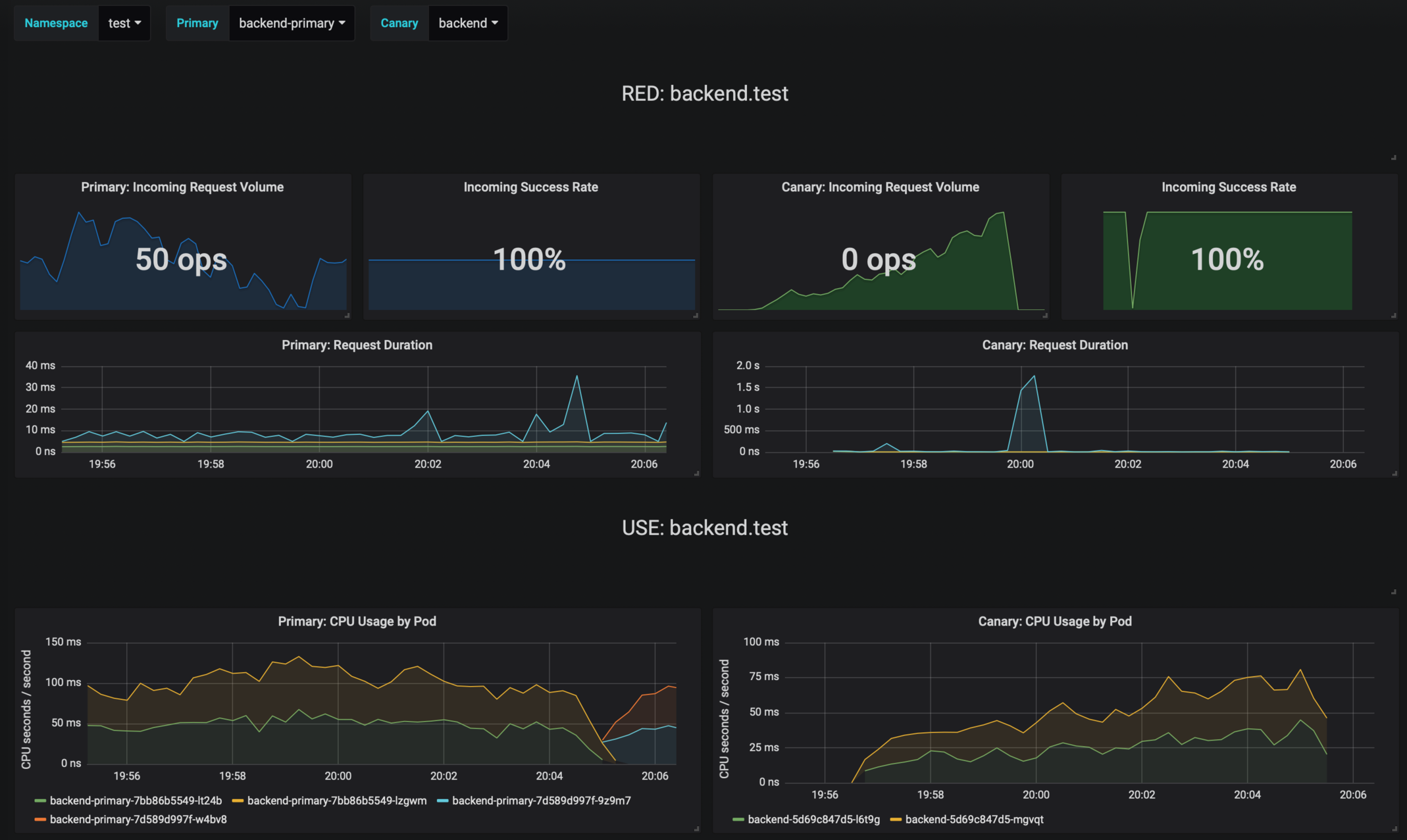

Grafana

```bash
helm upgrade -i flagger-grafana flagger/grafana \
  --set url=http://prometheus:9090
```

Logging

Flagger writes its logs in JSON format. To follow a canary analysis, print the message field of the most recent entries:

```bash
kubectl -n istio-system logs deployment/flagger --tail=100 | jq .msg

Starting canary deployment for podinfo.test
Advance podinfo.test canary weight 5
Advance podinfo.test canary weight 10
Advance podinfo.test canary weight 15
Advance podinfo.test canary weight 20
Advance podinfo.test canary weight 25
Advance podinfo.test canary weight 30
Advance podinfo.test canary weight 35
Halt podinfo.test advancement success rate 98.69% < 99%
Advance podinfo.test canary weight 40
Halt podinfo.test advancement request duration 1.515s > 500ms
Advance podinfo.test canary weight 45
Advance podinfo.test canary weight 50
Copying podinfo.test template spec to podinfo-primary.test
Halt podinfo-primary.test advancement waiting for rollout to finish: 1 old replicas are pending termination
Scaling down podinfo.test
Promotion completed! podinfo.test
```

Event Webhook

Flagger can send event payloads to a webhook. Set the webhook URL when installing Flagger with Helm:

```bash
helm upgrade -i flagger flagger/flagger \
  --set eventWebhook=https://example.com/flagger-canary-event-webhook
```

The JSON payload has the following schema:

```json
{
  "name": "string (canary name)",
  "namespace": "string (canary namespace)",
  "phase": "string (canary phase)",
  "metadata": {
    "eventMessage": "string (canary event message)",
    "eventType": "string (canary event type)",
    "timestamp": "string (unix timestamp ms)"
  }
}
```

Example:

```json
{
  "name": "podinfo",
  "namespace": "default",
  "phase": "Progressing",
  "metadata": {
    "eventMessage": "New revision detected! Scaling up podinfo.default",
    "eventType": "Normal",
    "timestamp": "1578607635167"
  }
}
```

An event webhook can also be set per canary in the analysis spec:

```yaml
  analysis:
    webhooks:
      - name: "send to Slack"
        type: event
        url: http://event-recevier.notifications/slack
```

Metrics

Flagger exposes the following Prometheus metrics:

```
# Flagger version and mesh provider gauge
flagger_info{version="0.10.0", mesh_provider="istio"} 1

# Canaries total gauge
flagger_canary_total{namespace="test"} 1

# Canary promotion last known status gauge
# 0 - running, 1 - successful, 2 - failed
flagger_canary_status{name="podinfo",namespace="test"} 1

# Canary traffic weight gauge
flagger_canary_weight{workload="podinfo-primary",namespace="test"} 95
flagger_canary_weight{workload="podinfo",namespace="test"} 5

# Seconds spent performing canary analysis histogram
flagger_canary_duration_seconds_bucket{name="podinfo",namespace="test",le="10"} 6
flagger_canary_duration_seconds_bucket{name="podinfo",namespace="test",le="+Inf"} 6
flagger_canary_duration_seconds_sum{name="podinfo",namespace="test"} 17.3561329
flagger_canary_duration_seconds_count{name="podinfo",namespace="test"} 6

# Last canary metric analysis result per different metrics
flagger_canary_metric_analysis{metric="podinfo-http-successful-rate",name="podinfo",namespace="test"} 1
flagger_canary_metric_analysis{metric="podinfo-custom-metric",name="podinfo",namespace="test"} 0.918223108974359

# Canary successes total counter
flagger_canary_successes_total{name="podinfo",namespace="test",deployment_strategy="canary",analysis_status="completed"} 5

# Canary failures total counter
flagger_canary_failures_total{name="podinfo",namespace="test",deployment_strategy="canary",analysis_status="completed"} 1
```
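The metric samples above can be turned into a short status summary. The following is a minimal sketch, not part of Flagger: it assumes the samples are available as plain text with comma-separated labels, and it uses a simplified regex parser that handles only lines of the shape shown above, not the full Prometheus exposition format. The names `parse_samples` and `summarize` are hypothetical helpers introduced for illustration.

```python
import re

# Sample lines taken from the metrics listing (labels comma-separated).
SAMPLE = '''\
flagger_canary_status{name="podinfo",namespace="test"} 1
flagger_canary_weight{workload="podinfo-primary",namespace="test"} 95
flagger_canary_weight{workload="podinfo",namespace="test"} 5
flagger_canary_duration_seconds_sum{name="podinfo",namespace="test"} 17.3561329
flagger_canary_duration_seconds_count{name="podinfo",namespace="test"} 6
'''

# Status codes as documented in the comment above flagger_canary_status.
STATUS = {0: "running", 1: "successful", 2: "failed"}

LINE_RE = re.compile(r'^(\w+)\{(.*)\}\s+(\S+)$')
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_samples(text):
    """Return a list of (metric name, labels dict, float value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = LINE_RE.match(line)
        if match is None:
            continue  # ignore lines this simplified parser cannot read
        name, raw_labels, value = match.groups()
        samples.append((name, dict(LABEL_RE.findall(raw_labels)), float(value)))
    return samples


def summarize(samples):
    """Collect status, traffic weights, and mean analysis duration."""
    summary = {"weights": {}}
    total = count = None
    for name, labels, value in samples:
        if name == "flagger_canary_status":
            summary["status"] = STATUS.get(int(value), "unknown")
        elif name == "flagger_canary_weight":
            summary["weights"][labels["workload"]] = value
        elif name == "flagger_canary_duration_seconds_sum":
            total = value
        elif name == "flagger_canary_duration_seconds_count":
            count = value
    if total is not None and count:
        # Histogram sum divided by count gives the mean analysis duration.
        summary["mean_duration_seconds"] = total / count
    return summary


summary = summarize(parse_samples(SAMPLE))
print(summary)
```

In practice these values would more likely be queried from Prometheus than parsed by hand. The sketch only illustrates how the status code, the weight gauges, and the histogram sum and count relate to each other.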
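On the receiving side, the event webhook payload described under Event Webhook can be decoded with the standard library alone. This is a sketch under stated assumptions: it relies only on the fields listed in the payload schema, treats `timestamp` as a string of Unix milliseconds as the schema says, and leaves out the HTTP server. `CanaryEvent` and `parse_event` are hypothetical names, not part of Flagger.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


@dataclass
class CanaryEvent:
    name: str
    namespace: str
    phase: str
    message: str
    event_type: str
    timestamp: datetime


def parse_event(body: str) -> CanaryEvent:
    """Decode a webhook body that follows the documented payload schema."""
    payload = json.loads(body)
    meta = payload["metadata"]
    # The schema documents the timestamp as a string of Unix milliseconds.
    millis = int(meta["timestamp"])
    return CanaryEvent(
        name=payload["name"],
        namespace=payload["namespace"],
        phase=payload["phase"],
        message=meta["eventMessage"],
        event_type=meta["eventType"],
        timestamp=EPOCH + timedelta(milliseconds=millis),
    )


# The example payload from the Event Webhook section.
EXAMPLE = """
{
  "name": "podinfo",
  "namespace": "default",
  "phase": "Progressing",
  "metadata": {
    "eventMessage": "New revision detected! Scaling up podinfo.default",
    "eventType": "Normal",
    "timestamp": "1578607635167"
  }
}
"""

event = parse_event(EXAMPLE)
print(f"[{event.timestamp.isoformat()}] {event.namespace}/{event.name} "
      f"{event.phase}: {event.message}")
```

Adding a `timedelta` to the epoch keeps the millisecond value exact, which converting through a floating-point number of seconds would not guarantee.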