Blue/Green Deployments

This guide shows you how to automate Blue/Green deployments with Flagger and Kubernetes.

For applications that are not deployed on a service mesh, Flagger can orchestrate Blue/Green style deployments with Kubernetes L4 networking. When a service mesh is in use, Blue/Green can be configured as described in Flagger's deployment strategies documentation.

Prerequisites

Flagger requires a Kubernetes cluster v1.16 or newer.

Install Flagger and the Prometheus add-on:
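A minimal sketch of the installation with Helm. It assumes the Flagger chart repository at https://flagger.app, a release and namespace both named flagger, and Helm 3.2 or newer for the --create-namespace flag; adjust these to your environment.

```shell
# Add the Flagger Helm repository (assumed URL: https://flagger.app)
helm repo add flagger https://flagger.app

# Install Flagger together with its bundled Prometheus,
# using plain Kubernetes networking as the provider
helm upgrade -i flagger flagger/flagger \
  --namespace flagger \
  --create-namespace \
  --set prometheus.install=true \
  --set meshProvider=kubernetes
```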

If you already have a Prometheus instance running in your cluster, you can point Flagger to the ClusterIP service with:
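As a sketch, the existing Prometheus can be referenced through the chart's metricsServer value. The address http://prometheus.monitoring:9090 is a placeholder for a service named prometheus in a monitoring namespace; replace it with your own ClusterIP service.

```shell
# Point Flagger at an existing Prometheus instead of installing one.
# The metricsServer address below is a placeholder.
helm upgrade -i flagger flagger/flagger \
  --namespace flagger \
  --set metricsServer=http://prometheus.monitoring:9090 \
  --set meshProvider=kubernetes
```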

Optionally, you can enable Slack notifications:
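A sketch of the Slack settings, assuming the release was installed as flagger in the flagger namespace. The webhook URL, channel, and user are placeholders.

```shell
# Keep the existing values and add Slack settings on top.
# Replace the webhook URL, channel, and user with your own.
helm upgrade -i flagger flagger/flagger \
  --reuse-values \
  --namespace flagger \
  --set slack.url=https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK \
  --set slack.channel=general \
  --set slack.user=flagger
```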

Bootstrap

Flagger takes a Kubernetes deployment and, optionally, a horizontal pod autoscaler (HPA), then creates a series of objects (Kubernetes deployments and ClusterIP services). These objects expose the application inside the cluster and drive the canary analysis and Blue/Green promotion.

Create a test namespace:
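For example, assuming the namespace is called test (the name used in the sketches throughout this guide):

```shell
# Create the namespace that will hold the demo workload
kubectl create ns test
```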

Create a deployment and a horizontal pod autoscaler:
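One way to do this is to apply the podinfo demo manifests from the Flagger repository. The kustomize path and the main ref are assumptions; any deployment with a matching HPA works.

```shell
# Deploy the podinfo demo app and its HPA (assumed to target the test namespace).
# The URL is quoted so the shell does not treat "?" as a glob character.
kubectl apply -k "https://github.com/fluxcd/flagger//kustomize/podinfo?ref=main"
```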

Deploy the load testing service to generate traffic during the analysis:
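A sketch using the load tester manifests from the Flagger repository. As with the demo app, the kustomize path and ref are assumptions, and the manifests are assumed to install a flagger-loadtester deployment and service in the test namespace.

```shell
# Deploy the Flagger load tester next to the demo app
kubectl apply -k "https://github.com/fluxcd/flagger//kustomize/tester?ref=main"
```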

Create a canary custom resource:
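The following sketch writes a Canary resource for a deployment named podinfo in the test namespace. The names, port 9898, thresholds, and webhook commands are illustrative assumptions. The interval of 30s with 10 iterations is chosen so that the analysis lasts five minutes.

```shell
# Write the Canary manifest to a local file (quoted heredoc: no shell expansion)
cat > podinfo-canary.yaml <<'EOF'
apiVersion: flagger.app/v1beta1
kind: Canary
metadata:
  name: podinfo
  namespace: test
spec:
  # plain Kubernetes L4 networking, no service mesh
  provider: kubernetes
  # deployment reference
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  # seconds to wait for the rollout to make progress before rollback
  progressDeadlineSeconds: 60
  # HPA reference (optional); use autoscaling/v2beta2 on older clusters
  autoscalerRef:
    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    name: podinfo
  service:
    port: 9898
    portDiscovery: true
  analysis:
    # schedule interval
    interval: 30s
    # max number of failed checks before rollback
    threshold: 2
    # number of checks to run before promotion (10 x 30s = 5 minutes)
    iterations: 10
    metrics:
      - name: request-success-rate
        # minimum share of non-5xx responses, in percent
        thresholdRange:
          min: 99
        interval: 1m
      - name: request-duration
        # maximum P99 request duration, in milliseconds
        thresholdRange:
          max: 500
        interval: 30s
    webhooks:
      # conformance test against the green version
      - name: smoke-test
        type: pre-rollout
        url: http://flagger-loadtester.test/
        timeout: 15s
        metadata:
          type: bash
          cmd: "curl -sd 'anon' http://podinfo-canary.test:9898/token | grep token"
      # load test against the green version
      - name: load-test
        url: http://flagger-loadtester.test/
        timeout: 5s
        metadata:
          type: cmd
          cmd: "hey -z 1m -q 10 -c 2 http://podinfo-canary.test:9898/"
EOF
```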

The above configuration will run an analysis for five minutes.

Save the above resource as podinfo-canary.yaml and then apply it:
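Assuming the file is in the current directory:

```shell
# Create or update the Canary resource from the saved file
kubectl apply -f ./podinfo-canary.yaml
```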

After a couple of seconds Flagger will create the canary objects:
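A quick way to check the result, assuming the canary is named podinfo in the test namespace. The listing should include the primary deployment and the ClusterIP services for the app, its primary, and its canary.

```shell
# List the objects Flagger manages for the demo app
kubectl -n test get deployments,hpa,services,canaries
```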

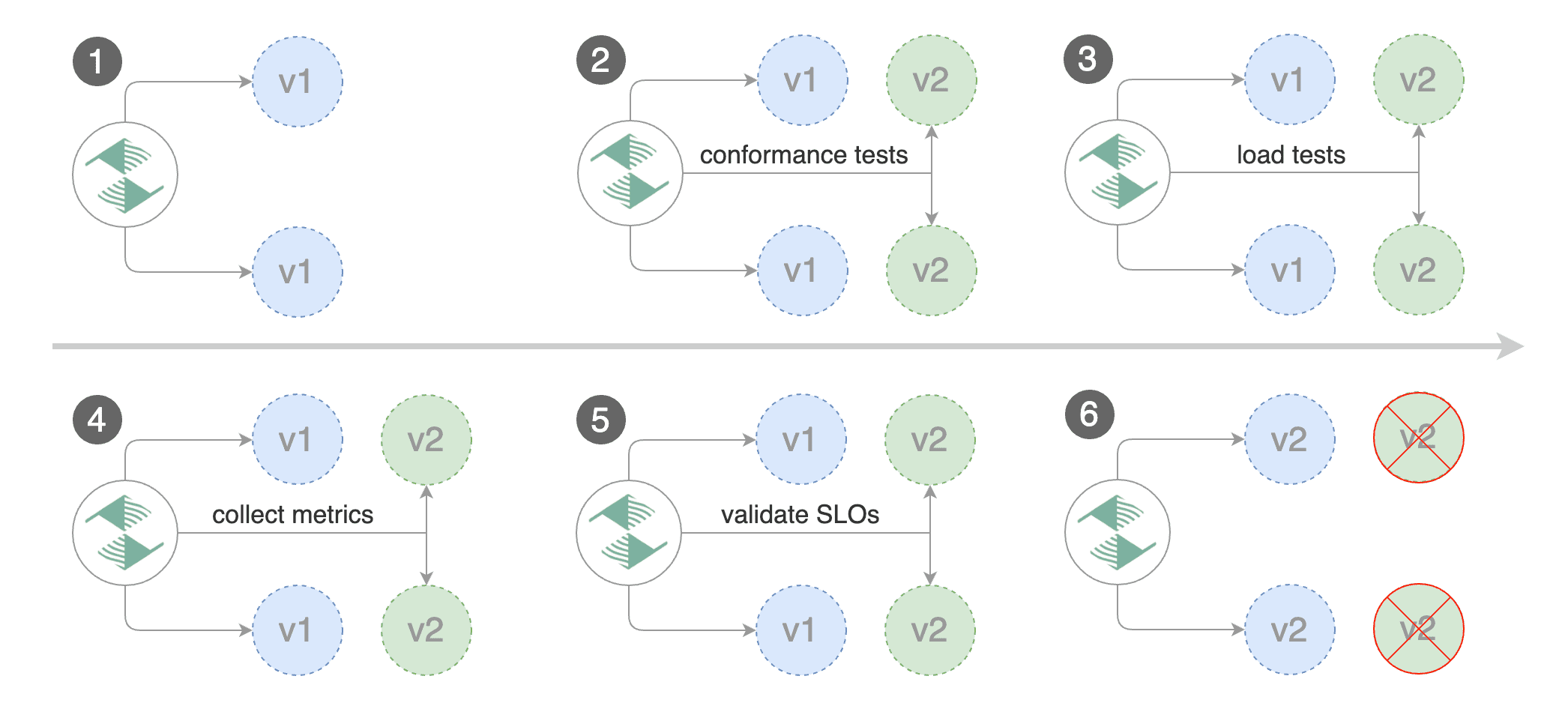

Blue/Green scenario:

- on bootstrap, Flagger creates three ClusterIP services (app-primary, app-canary, app) and a shadow deployment named app-primary that represents the blue version
- when a new version is detected, Flagger scales up the green version and runs the conformance tests (the tests should target the app-canary ClusterIP service to reach the green version)
- if the conformance tests pass, Flagger starts the load tests and validates them with custom Prometheus queries
- if the load test analysis is successful, Flagger promotes the new version to app-primary and scales down the green version

Automated Blue/Green promotion

Trigger a deployment by updating the container image:
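A sketch, assuming the deployment is podinfo with a container named podinfod. The image tag is a placeholder for any version newer than the one currently running.

```shell
# Change the container image to start a new Blue/Green rollout.
# Container name and tag are assumptions; use the ones from your deployment.
kubectl -n test set image deployment/podinfo \
  podinfod=ghcr.io/stefanprodan/podinfo:6.0.1
```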

Flagger detects that the deployment revision changed and starts a new rollout:
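The rollout progress can be followed in the events of the canary object. This assumes the canary is named podinfo in the test namespace.

```shell
# Show the canary status and the events Flagger recorded for the rollout
kubectl -n test describe canary/podinfo
```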

Note that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

You can monitor all canaries with:
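For example, assuming the watch utility is installed on your workstation:

```shell
# Refresh the list of canaries in all namespaces every two seconds
watch kubectl get canaries --all-namespaces
```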

Automated rollback

During the analysis you can generate HTTP 500 errors and high latency to test Flagger's rollback.

Exec into the load tester pod with:
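A sketch, assuming the load tester runs as a deployment named flagger-loadtester in the test namespace and that its image ships a shell:

```shell
# Open an interactive shell in one of the load tester pods
kubectl -n test exec -it deploy/flagger-loadtester -- sh
```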

Generate HTTP 500 errors:
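From inside the load tester pod, the demo app can be asked to return errors. This sketch assumes the podinfo /status/{code} endpoint and the podinfo-canary service on port 9898.

```shell
# Request a 500 response from the green version once per second
watch -n 1 curl -s http://podinfo-canary.test:9898/status/500
```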

Generate latency:
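Similarly, assuming the podinfo /delay/{seconds} endpoint, slow responses can be produced from inside the load tester pod:

```shell
# Each request takes about two seconds to complete
watch -n 1 curl -s http://podinfo-canary.test:9898/delay/2
```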

When the number of failed checks reaches the analysis threshold, the green version is scaled to zero and the rollout is marked as failed.

Custom metrics

The analysis can be extended with Prometheus queries. The demo app is instrumented with Prometheus so you can create a custom check that will use the HTTP request duration histogram to validate the canary (green version).

Create a metric template and apply it on the cluster:
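The sketch below writes a MetricTemplate named not-found-percentage that computes the share of HTTP 404 responses. The template name, the Prometheus address (the bundled instance in the flagger namespace), and the kubernetes_namespace and kubernetes_pod_name labels are assumptions that depend on how your Prometheus scrapes pods.

```shell
# Write the MetricTemplate manifest to a local file
cat > not-found-percentage.yaml <<'EOF'
apiVersion: flagger.app/v1beta1
kind: MetricTemplate
metadata:
  name: not-found-percentage
  namespace: test
spec:
  provider:
    type: prometheus
    # assumed address of the Prometheus installed with Flagger
    address: http://flagger-prometheus.flagger:9090
  # 100 minus the percentage of non-404 requests = percentage of 404s
  query: |
    100 - sum(
        rate(
            http_request_duration_seconds_count{
              kubernetes_namespace="{{ namespace }}",
              kubernetes_pod_name=~"{{ target }}-[0-9a-zA-Z]+(-[0-9a-zA-Z]+)",
              status!="404"
            }[{{ interval }}]
        )
    )
    /
    sum(
        rate(
            http_request_duration_seconds_count{
              kubernetes_namespace="{{ namespace }}",
              kubernetes_pod_name=~"{{ target }}-[0-9a-zA-Z]+(-[0-9a-zA-Z]+)"
            }[{{ interval }}]
        )
    ) * 100
EOF
```

The file can then be applied with `kubectl apply -f ./not-found-percentage.yaml`.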

Edit the canary analysis and add the following metric:
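One way to add the metric without opening an editor is a JSON patch that appends an entry to spec.analysis.metrics. The sketch assumes a canary named podinfo, a metric template named not-found-percentage in the same namespace, and a maximum of 5 percent; editing the YAML file and re-applying it works equally well.

```shell
# Append a custom metric check to the existing analysis metrics list
kubectl -n test patch canary/podinfo --type=json -p '[
  {
    "op": "add",
    "path": "/spec/analysis/metrics/-",
    "value": {
      "name": "404s percentage",
      "templateRef": {"name": "not-found-percentage"},
      "thresholdRange": {"max": 5},
      "interval": "1m"
    }
  }
]'
```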

The above configuration validates the canary (green version) by checking that HTTP 404 responses stay below 5% of the total traffic. If the 404 rate reaches the 5% threshold, the analysis fails and the canary is rolled back.

Trigger a deployment by updating the container image:
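As before, any change to the image tag starts a rollout. The container name podinfod and the tag below are assumptions.

```shell
# Use a tag different from the one currently deployed
kubectl -n test set image deployment/podinfo \
  podinfod=ghcr.io/stefanprodan/podinfo:6.0.2
```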

Generate 404s:
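From inside the load tester pod, assuming the podinfo /status/{code} endpoint:

```shell
# Request a 404 response from the green version once per second
watch -n 1 curl -s http://podinfo-canary.test:9898/status/404
```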

Watch Flagger logs:
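A sketch, assuming Flagger runs as a deployment named flagger in the flagger namespace and emits JSON logs. The jq filter is optional and only extracts the message field.

```shell
# Stream Flagger logs and print only the msg field of each JSON line
kubectl -n flagger logs deployment/flagger -f | jq .msg
```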

If you have alerting configured, Flagger will send a notification with the reason why the canary failed.

Conformance Testing with Helm

Flagger comes with a testing service that can run Helm tests when configured as a pre-rollout webhook.

Deploy the Helm test runner in the kube-system namespace using the tiller service account:
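A sketch using the load tester chart from the Flagger Helm repository. The release name flagger-helmtester and the serviceAccountName value are assumptions chosen to match the service address and the tiller account mentioned in this section.

```shell
# Install the tester in kube-system so it can run Helm tests via Tiller
helm upgrade -i flagger-helmtester flagger/loadtester \
  --namespace=kube-system \
  --set serviceAccountName=tiller
```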

When deployed, the Helm tester API will be available at http://flagger-helmtester.kube-system/.

Add a Helm test pre-rollout hook to your chart:
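The sketch below writes the webhook fragment to a scratch file (a hypothetical name) so it can be copied into the Canary template of your chart. The webhook name, timeout, and the Helm v2 style test command with --cleanup are assumptions that follow from the Tiller-based setup in this section.

```shell
# Write the webhook fragment for the chart's Canary template.
# {{ .Release.Name }} is rendered by Helm, not by the shell.
cat > helm-test-webhook.snippet.yaml <<'EOF'
  analysis:
    webhooks:
      - name: "conformance testing"
        type: pre-rollout
        url: http://flagger-helmtester.kube-system/
        timeout: 3m
        metadata:
          type: "helm"
          cmd: "test {{ .Release.Name }} --cleanup"
EOF
```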

When the canary analysis starts, Flagger will call the pre-rollout webhooks. If the Helm test fails, Flagger will retry until the analysis threshold is reached and the canary is rolled back.

For an in-depth look at the analysis process, read the usage docs.
